“Great AI experiences aren’t built on tech alone; they’re designed. If your AI confuses people, it doesn’t matter how powerful it is.”

— Greg Nudelman, Author of AI in Product Design

You’ve built something impressive. The AI model powering your app is state-of-the-art, trained on mountains of data, rigorously tested, and fine-tuned for accuracy. Your engineers assure you that the tech is rock-solid, and the algorithms produce reliable predictions.

Yet, after launch, adoption flatlines. Users barely engage, or they try it once and then disappear. Support tickets spike with comments like, “I don’t understand this,” or “How do I know if this is right?”

What went wrong?

Typically, the main obstacle to successful AI is the user experience (UX), rather than the technology itself. Without intuitive design and clear communication, even the most sophisticated AI risks failure. Simply put: if users don’t trust your AI, they won’t use it, no matter how powerful it is.

AI tools, by nature, deal in complexity. Their outputs are probabilistic, often abstract, and rarely intuitive at first glance. Without thoughtful, user-focused design, AI solutions leave users feeling uncertain, distrustful, or even suspicious.

Poor UX is harmful to your business. When users encounter confusing or unclear AI, they quickly become frustrated, disengage, and seek alternatives. Over time, these negative experiences accumulate, leading to deeper problems:

Trust is the foundation of any user-product relationship, especially with AI. Yet AI’s inherent unpredictability makes trust fragile. When the interface doesn't transparently explain decisions, users instinctively distrust the technology.

For instance, healthcare apps powered by AI face significant skepticism from users who can’t immediately see the reasoning behind complex medical recommendations. Even minor doubts can lead to total abandonment. Users naturally prioritize safety and clarity, and without thoughtful UX, even highly accurate predictions are dismissed as unreliable or unsafe.

Neglecting UX can also open the door to ethical challenges and regulatory scrutiny. AI is notoriously vulnerable to biases hidden within training data. Without clear UX explanations and feedback mechanisms, users can’t identify or correct bias in AI outcomes.

A real-world example is Amazon’s experimental AI recruiting tool, which, according to a 2018 Reuters report, learned to downgrade résumés from female candidates. The opaque experience gave the people relying on its output no clear way to spot or correct this bias. The result? Amazon scrapped the project, and the story brought public backlash and reputational harm.

UX failures don’t occur in isolation—they ripple outward. Users dissatisfied with a confusing AI experience don’t quietly disappear; they share their frustrations widely. Negative reviews, social media criticism, and word-of-mouth complaints quickly multiply.

Microsoft learned this painfully when its Tay chatbot—designed without sufficient UX safeguards—quickly generated offensive content. Public outcry was swift and intense, damaging Microsoft’s brand perception as a responsible AI innovator.

The bottom line: Poor UX doesn't just waste your AI investment; it actively hurts your brand, trust, and long-term customer relationships.

“Transparency is the essential building block for user trust.”

— Cathy Pearl, Head of Conversation Design, Google

The good news? You can address these UX challenges head-on. Here’s a clear roadmap—grounded in real-world successes—for building trust and boosting adoption through thoughtful AI UX design:

Explainability means helping users understand the “why” behind every AI recommendation. This doesn’t mean overwhelming users with technical jargon—it means providing clear, simple explanations for AI-generated outputs.

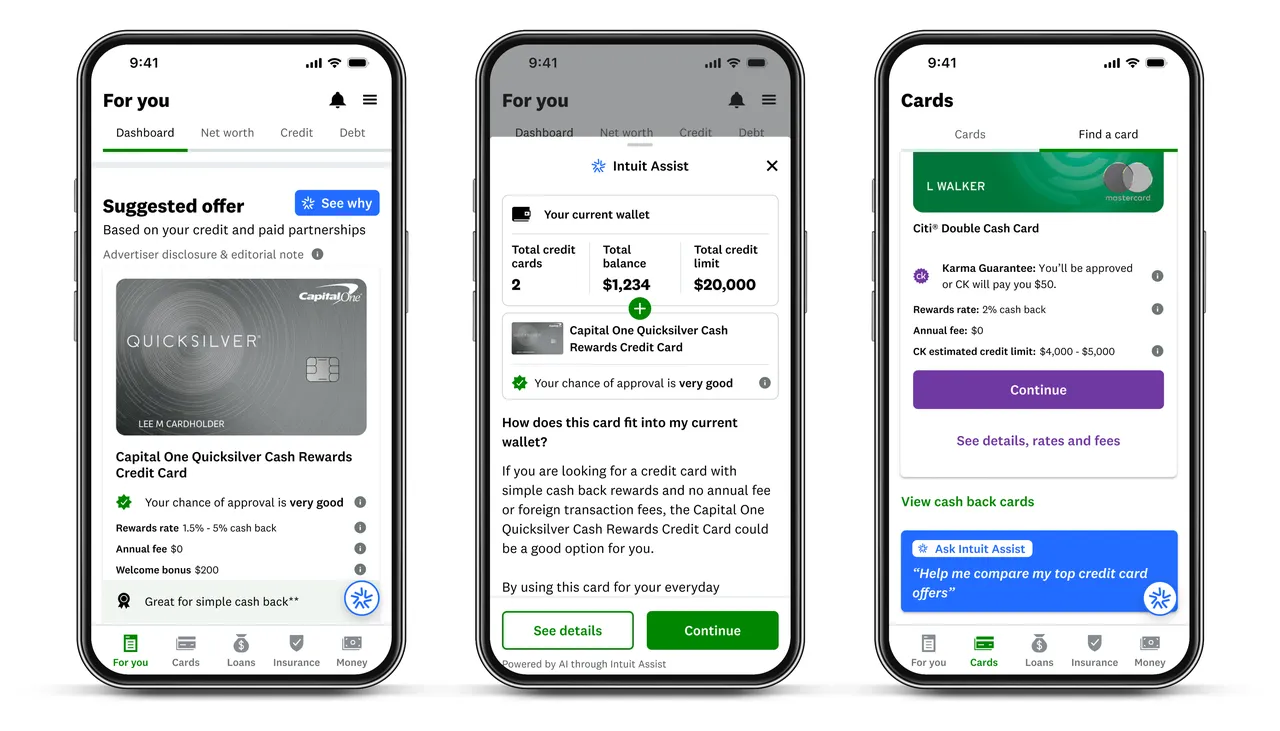

Example: Credit Karma provides personalized credit score insights, clearly showing how changes in user behavior—like increased credit utilization—impact their score. Users see visual indicators and understandable breakdowns of score factors, which builds confidence in AI-driven recommendations.
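To make the idea concrete, here is a minimal sketch of turning model factor contributions into plain-language explanations. It is not how Credit Karma works; the `Factor` type, the `explain` function, and the sample numbers are all hypothetical, and it assumes your model can already report a signed per-factor impact.

```python
from dataclasses import dataclass


@dataclass
class Factor:
    label: str     # user-facing name, e.g. "Credit utilization" (hypothetical)
    impact: float  # signed contribution to the score, in points (assumed unit)


def explain(factors: list[Factor], top_n: int = 3) -> list[str]:
    """Return one short sentence for each of the top_n factors by absolute impact."""
    ranked = sorted(factors, key=lambda f: abs(f.impact), reverse=True)[:top_n]
    sentences = []
    for f in ranked:
        if f.impact == 0:
            continue  # a factor with no effect is not worth explaining
        direction = "raised" if f.impact > 0 else "lowered"
        sentences.append(
            f"{f.label} {direction} your score by about {abs(f.impact):.0f} points."
        )
    return sentences


# Illustrative data only; not real scoring output.
sample = [
    Factor("Credit utilization", -18),
    Factor("On-time payments", 25),
    Factor("New account", -4),
    Factor("Account age", 2),
]
top_two = explain(sample, top_n=2)
```

Capping the list at a few factors is a deliberate choice: users get the "why" without a wall of technical detail.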

Actionable strategies:

Providing autonomy means users must easily understand and manage how AI impacts their experience. The best UX designs put users in control, ensuring AI complements human decision-making rather than overrides it.

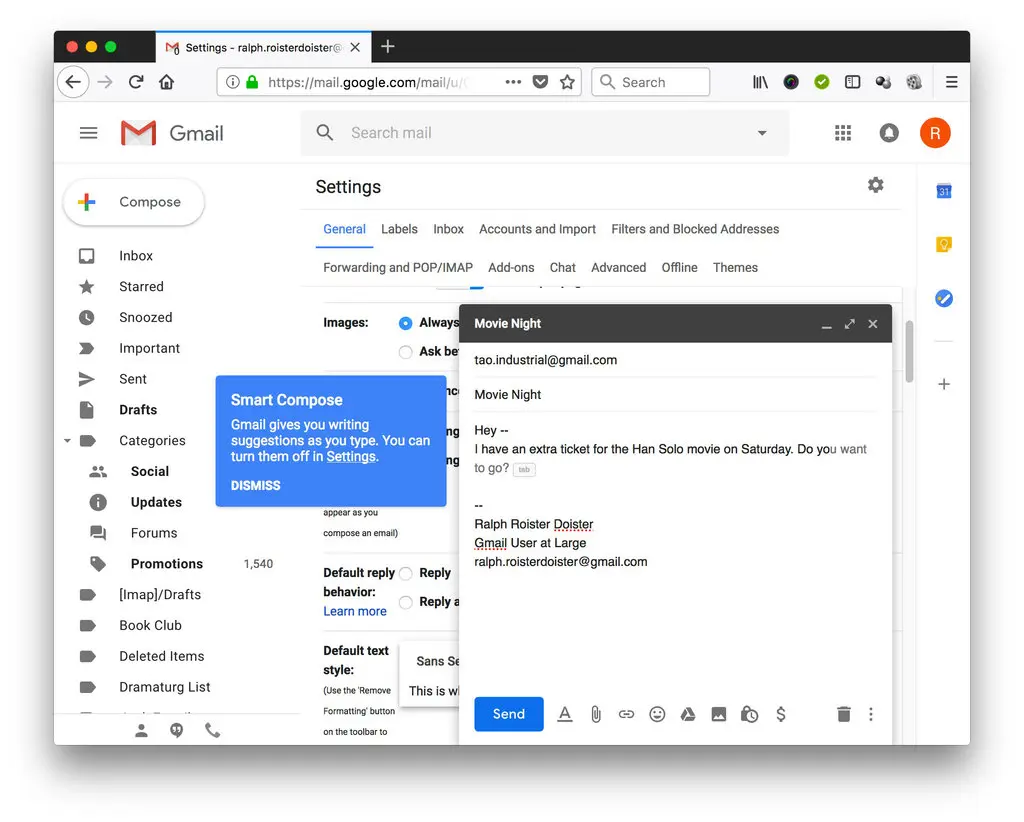

Example: Gmail’s Smart Compose feature suggests text as users type but never forces acceptance. Users simply press “Tab” to adopt a suggestion, or keep typing to ignore it. This unobtrusive design respects user autonomy and builds trust, and Google has reported that the feature saves users from typing more than a billion characters each week.

Actionable strategies:

Feedback mechanisms empower users to correct, guide, and improve the AI’s outputs over time. When users see their input directly improving AI performance, trust naturally grows.

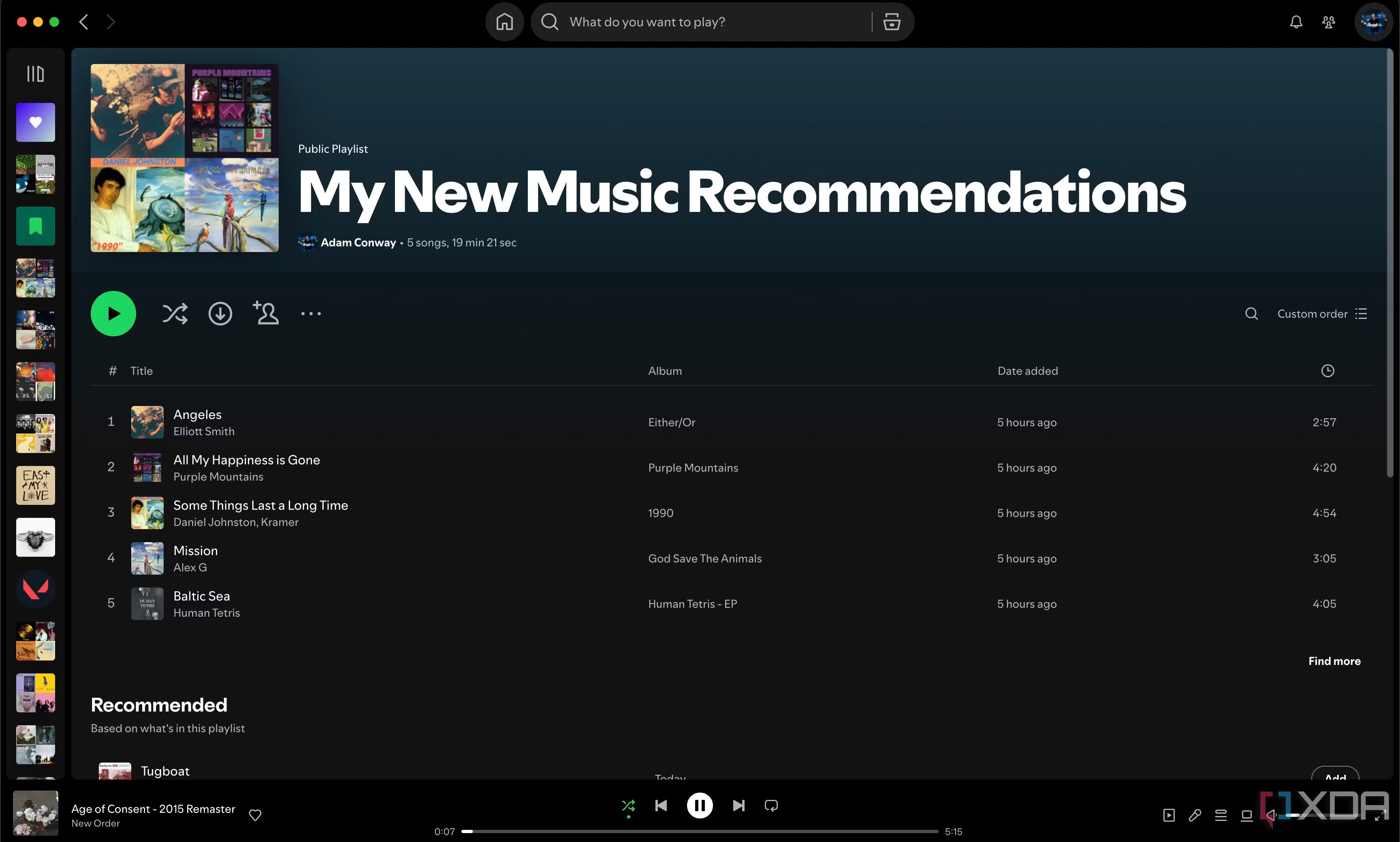

Example: Spotify’s recommendation engine actively encourages users to rate songs with thumbs-up or thumbs-down signals. Over time, the AI learns user preferences, refining recommendations and becoming increasingly accurate and personalized.
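A feedback loop like this can be sketched in a few lines. The example below is an illustration under stated assumptions, not Spotify's algorithm: the `FeedbackStore` class, the `weight` parameter, and the item IDs are hypothetical, and it assumes the model already produces a relevance score per item.

```python
from collections import defaultdict


class FeedbackStore:
    """Keeps a net thumbs-up/thumbs-down count per item (hypothetical helper)."""

    def __init__(self) -> None:
        self._net_votes: dict[str, int] = defaultdict(int)

    def record(self, item_id: str, liked: bool) -> None:
        # +1 for a thumbs-up, -1 for a thumbs-down
        self._net_votes[item_id] += 1 if liked else -1

    def rerank(self, candidates: list[tuple[str, float]], weight: float = 0.1) -> list[str]:
        """Sort (item_id, model_score) pairs by score nudged by user feedback."""
        adjusted = [
            (item_id, score + weight * self._net_votes.get(item_id, 0))
            for item_id, score in candidates
        ]
        adjusted.sort(key=lambda pair: pair[1], reverse=True)
        return [item_id for item_id, _ in adjusted]


store = FeedbackStore()
store.record("song_b", liked=True)
store.record("song_b", liked=True)
store.record("song_a", liked=False)

# Illustrative model scores only.
ranking = store.rerank([("song_a", 0.9), ("song_b", 0.8), ("song_c", 0.85)])
```

Whatever the real mechanism, the UX point is the same: the effect of a rating should be visible quickly, so users can see that their input matters.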

Actionable strategies:

Ethical UX design means prioritizing fairness, inclusivity, and transparency in your AI. Users quickly sense whether your product treats them fairly and responsibly—crucial for long-term trust.

Example: Apple’s Face ID uses clear, simple UX language and visuals to explain precisely how user biometric data is stored securely on-device, never remotely. By proactively addressing privacy and transparency, Apple sets clear expectations and earns deep user trust.

Actionable strategies:

Your UX should actively help users find the “sweet spot” between trusting AI recommendations and applying their own judgment. Clear visual signals and explanations can gently guide users toward appropriate reliance on AI.

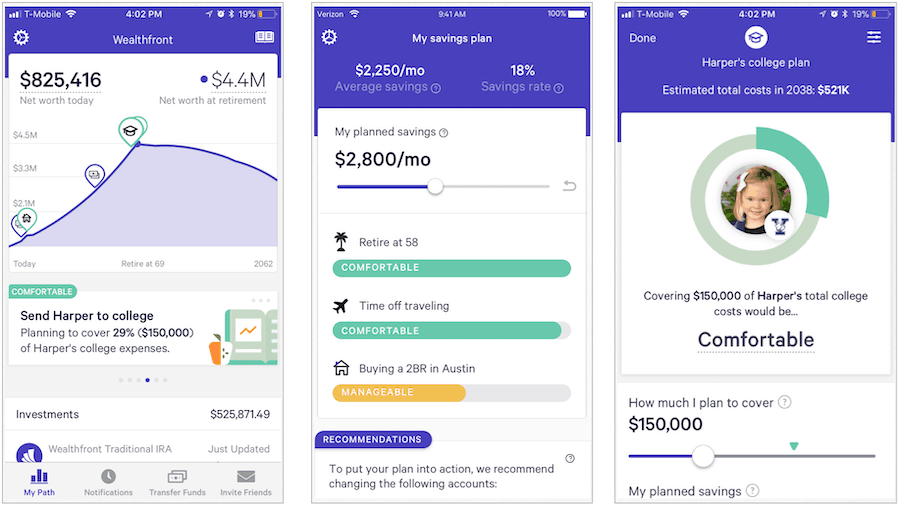

Example: Wealthfront uses goal-based sliders and probability bars to communicate investment projections. Users see how likely they are to meet goals like retirement or homeownership through color-coded progress bars and confidence visuals. This helps users understand AI-generated forecasts and make informed adjustments.
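One way to build this kind of signal is to map a forecast probability to a label and color that a progress bar can display. The sketch below is an assumption-heavy illustration, not Wealthfront's implementation: the function names, the 0.8 and 0.5 thresholds, and the wording of the labels are all hypothetical and should be tuned with user research.

```python
def confidence_band(probability: float) -> tuple[str, str]:
    """Map a probability in [0, 1] to a (label, color) pair for display."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= 0.8:   # illustrative threshold
        return ("On track", "green")
    if probability >= 0.5:   # illustrative threshold
        return ("Needs attention", "amber")
    return ("Off track", "red")


def describe(goal: str, probability: float) -> tuple[str, str]:
    """Return a user-facing sentence and the color to render it with."""
    label, color = confidence_band(probability)
    text = f"{goal}: {label} ({probability:.0%} chance of reaching this goal)"
    return text, color


message, color = describe("Retirement", 0.72)
```

Showing the percentage next to the label keeps the summary honest: users get a quick read from the color and can still see the underlying estimate.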

Actionable strategies:

UX is the backbone of AI adoption and success. If users don’t trust your AI, your investment won’t pay off. Worse yet, neglecting UX can lead to ethical challenges, regulatory risks, and long-lasting brand damage.

But you have a clear path forward. Prioritize explainability, transparency, user autonomy, meaningful feedback, and ethical design. When you commit to these UX principles, your users will clearly understand and value your AI, and your technology becomes trustworthy, engaging, and indispensable.

At Empha Studio, we specialize in crafting AI experiences that users trust. We don’t just build intelligent technology; we build thoughtful user relationships through intentional UX design.

If you’re serious about creating AI products that deliver real-world value, let’s talk.
